SSH to EC2 instances via AWS Lambda

From AWS Lambda, SSH into your EC2 instances and run commands.

AWS Lambda lets you run arbitrary code without worrying about provisioning servers. I recently worked on a project where a Lambda function SSHed into an EC2 instance and ran some commands. This is a very powerful way to control access to your EC2 instances.

Why would you want to do this?

There are a number of reasons for wanting to bridge access to your EC2 instances via an AWS Lambda function.

Using one or more Lambda functions as a proxy allows you to provide an HTTP-based interface to existing functionality. Whether you use the AWS SDK or a Transposit operation to call the Lambda function directly, or use an API gateway for HTTP access to your Lambda, you can take an operation that previously required SSH credentials and make it far more accessible.

You can also make it more secure. While traditional SSH access requires a user account with privileges managed by something like sudo, the unfortunate truth is that the attack surface of a shell account is very large. By using a proxy, you limit the commands that can be run to the subset you define. In addition, you're not allowing direct access, so while privilege escalation isn't impossible, it's much more difficult. Finally, AWS IAM allows fine-grained access control: if you have two functions, one of which restarts a process and the other of which reads process status, you can grant different roles and users different abilities to call these functions based on time, user or even IP address.

Finally, like any good adapter pattern, a Lambda proxy has minimal impact on the proxied resource. The application can continue to run without being modified; all you have to do is add a user identity with sufficient permissions for the Lambda to use, and ensure the Lambda has network and shell access.

An alternative to this is AWS Systems Manager, which allows you to run commands on your EC2 instances via the AWS API. This alternative does require installation of an agent on each instance.

How do you set this up?

While the example below uses node, any of the other Lambda runtimes would work, as long as the language, or a library for the language, has support for SSH. (Also, this section borrows heavily from this 2017 article.)

Create a Lambda execution role and attach the following two policies: a basic execution policy that allows the Lambda to write to CloudWatch Logs for debugging purposes, and the AWSLambdaVPCAccessExecutionRole managed policy.

Here's the basic Lambda execution policy, which lets the Lambda output to CloudWatch Logs.

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Action": "logs:CreateLogGroup",
          "Resource": "arn:aws:logs:us-west-2:1111111:*"
        },
        {
          "Effect": "Allow",
          "Action": [
            "logs:CreateLogStream",
            "logs:PutLogEvents"
          ],
          "Resource": [
            "arn:aws:logs:us-west-2:1111111:log-group:/aws/lambda/function-name:*"
          ]
        }
      ]
    }

Here's the AWSLambdaVPCAccessExecutionRole managed policy (as of publishing time) which lets you access the network information required for a Lambda to communicate with an EC2 instance:

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Action": [
            "logs:CreateLogGroup",
            "logs:CreateLogStream",
            "logs:PutLogEvents",
            "ec2:CreateNetworkInterface",
            "ec2:DescribeNetworkInterfaces",
            "ec2:DeleteNetworkInterface"
          ],
          "Resource": "*"
        }
      ]
    }

Create the Lambda. The lowest memory setting is fine, but you'll want to increase the timeout from the default 3 seconds (I found 60 seconds ample). You'll also want to associate it with the VPC that your EC2 instances are in and at least two subnets within that VPC for higher availability (more about Lambdas, subnets and VPCs).

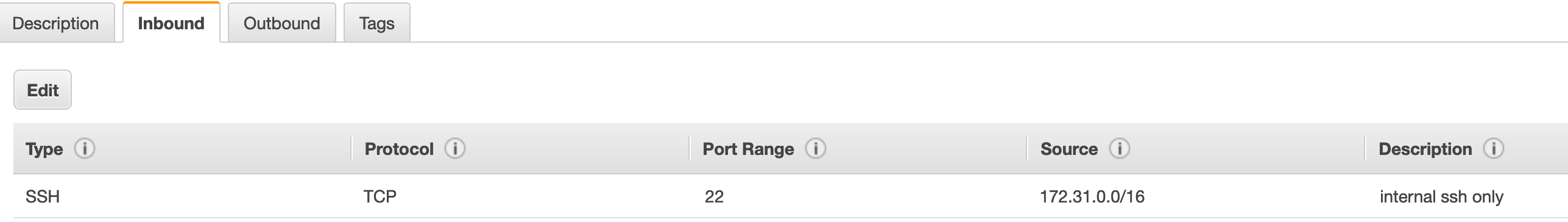

Make sure that your EC2 instance security groups allow SSH access from the CIDR block of the VPC subnets with which the Lambda has been associated. I set up the Lambda function to be in the 172.31.0.0/16 block in my VPC, so I want to make sure SSH is allowed from that IP block.
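If you want to sanity check that an address (for example, the private IP of the Lambda's network interface) really falls inside the CIDR block your security group allows, a small helper can do the arithmetic. This is a minimal sketch; the function names `ipToInt` and `inCidr` and the sample addresses are illustrative and not part of the original setup.

```javascript
// Convert a dotted-quad IPv4 address to an unsigned 32-bit integer.
function ipToInt(ip) {
  const parts = ip.split('.');
  const valid = parts.length === 4 &&
    parts.every((p) => /^\d{1,3}$/.test(p) && Number(p) <= 255);
  if (!valid) {
    throw new Error(`invalid IPv4 address: ${ip}`);
  }
  return parts.reduce((acc, p) => (acc * 256) + Number(p), 0);
}

// Return true if `ip` falls inside `cidr` (for example '172.31.0.0/16').
function inCidr(ip, cidr) {
  const [base, bitsText] = cidr.split('/');
  const bits = Number(bitsText);
  if (!Number.isInteger(bits) || bits < 0 || bits > 32) {
    throw new Error(`invalid prefix length in: ${cidr}`);
  }
  const size = 2 ** (32 - bits); // number of addresses in the block
  const start = Math.floor(ipToInt(base) / size) * size;
  const addr = ipToInt(ip);
  return addr >= start && addr < start + size;
}

console.log(inCidr('172.31.14.7', '172.31.0.0/16')); // true
console.log(inCidr('10.0.0.5', '172.31.0.0/16'));    // false
```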

Here's the Lambda code:

    const fs = require('fs');
    const SSH = require('simple-ssh');

    exports.handler = async (event) => {
        const pemfile = 'my.pem';
        const user = 'ec2-user';
        const host = 'myinternaladdress.region.compute.internal';

        // all this config could be passed in via the event
        const ssh = new SSH({
            host: host,
            user: user,
            key: fs.readFileSync(pemfile)
        });

        // the command itself is fixed; the event only toggles a known flag
        let cmd = "ls";
        if (event.cmd === "long") {
            cmd += " -l";
        }

        // wrap the callback-based SSH call so the handler can await the result
        const prom = new Promise(function(resolve, reject) {
            let ourout = "";
            ssh.exec(cmd, {
                exit: function() {
                    ourout += "\nsuccessfully exited!";
                    resolve(ourout);
                },
                out: function(stdout) {
                    ourout += stdout;
                }
            }).start({
                success: function() {
                    console.log("successful connection!");
                },
                fail: function(e) {
                    console.log("failed connection, boo");
                    console.log(e);
                    // reject so a failed connection doesn't hang until the Lambda times out
                    reject(e);
                }
            });
        });

        const res = await prom;
        const response = {
            statusCode: 200,
            body: res,
        };
        return response;
    };

Some things worth noting about this code.

- The `user`, `host`, and `pemfile` variables are hardcoded. These could be pulled from other sources, including a configuration file, Lambda environment variables, or a secrets manager. The `pemfile`, which points to an SSH private key, is assumed to be in the same directory as the Lambda.

- The `host` variable uses AWS internal hostnames. These names are constant throughout the lifetime of an instance, as opposed to public DNS names which, unless you use an elastic IP, will vary.

- The command (`cmd`) is hardcoded. I'd recommend having the command be unchangeable at runtime, otherwise you lose some of the security provided by the proxy. However, you can have the command take arguments at runtime.

- The SSH call is wrapped in a promise because this is deployed as a synchronous Lambda, so we want to wait for the operation to complete before sending back the results. If you are wrapping a longer running operation, you could set up the Lambda to be asynchronous and have the Lambda put a success or failure message on a message queue after completion.

- Because we are using the `simple-ssh` module, we need to deploy as a zip file. We also include the `.pem` file; make sure it is readable by the Lambda. I had to make it world readable (`chmod 444 my.pem`) before zipping it up.
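One way to keep the command fixed while still accepting arguments at runtime is an allowlist: the event names an action, and each action maps to a fixed command and a set of permitted arguments. The following is a rough sketch under that assumption; the `COMMANDS` table, the `action` and `args` event fields, and the `buildCommand` function are hypothetical names, not part of the Lambda shown above.

```javascript
// Hypothetical allowlist: action name -> fixed command plus permitted arguments.
const COMMANDS = {
  list: { cmd: 'ls', allowedArgs: ['-l', '-a'] },
  status: { cmd: 'systemctl status', allowedArgs: ['nginx', 'sshd'] },
};

// Turn an event such as { action: 'list', args: ['-l'] } into a command string,
// rejecting any action or argument that is not explicitly listed.
function buildCommand(event) {
  const known = Object.prototype.hasOwnProperty.call(COMMANDS, event.action);
  if (!known) {
    throw new Error(`unknown action: ${event.action}`);
  }
  const entry = COMMANDS[event.action];
  const args = event.args === undefined ? [] : event.args;
  if (!Array.isArray(args)) {
    throw new Error('args must be an array');
  }
  for (const arg of args) {
    if (!entry.allowedArgs.includes(arg)) {
      throw new Error(`argument not allowed: ${arg}`);
    }
  }
  return [entry.cmd, ...args].join(' ');
}

console.log(buildCommand({ action: 'list', args: ['-l'] })); // ls -l
```

Because arguments are compared against exact strings rather than interpolated freely, a caller cannot smuggle in shell metacharacters such as `;` or `&&`.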

Here's the shell script to build the zip file and deploy it. This script assumes a few things:

- the script is in the directory above the Lambda code

- the Lambda code and the `my.pem` file live in a directory called `lambda`

- `npm install simple-ssh` has been run inside the `lambda` directory

- the AWS Lambda function has been created via the AWS console

    #!/bin/bash
    set -euo pipefail
    IFS=$'\n\t'

    FUNCTION_NAME=lambda-function-name
    PROFILE_NAME=profile-which-can-update-lambda
    REGION=us-west-2

    cd lambda
    zip ../lambda.zip -r .
    aws --profile "$PROFILE_NAME" --region "$REGION" lambda update-function-code --function-name "$FUNCTION_NAME" --zip-file fileb://../lambda.zip
    cd ..

Finally, invoking this Lambda can be done in a number of different ways. You can:

- Wrap it with an API gateway, like AWS API Gateway, and have it accessible to any HTTP client

- Use a Transposit operation to invoke it

- Call it directly from an AWS SDK like boto3

- Attach it to a CloudFront distribution as long as your SSH and command line complete within the Lambda@Edge limits

- Call it using the AWS CLI

For example, this is how you invoke the function using the AWS CLI:

    aws --profile profile-name --region region-name lambda invoke --function-name function-name stdout-file.txt

`stdout-file.txt` will contain the output from the `function-name` call.
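Invoking from an SDK follows the same shape as the CLI call. Here is a minimal sketch using the AWS SDK for JavaScript v3 rather than boto3, so that it matches the Lambda's own language. It assumes the `@aws-sdk/client-lambda` package is installed and that credentials are available in the environment; the helper names `buildInvokeParams` and `invokeProxy` are illustrative.

```javascript
// Build the parameters for a Lambda Invoke call. Setting fireAndForget
// uses the 'Event' invocation type, which returns without waiting.
function buildInvokeParams(functionName, payload, fireAndForget = false) {
  return {
    FunctionName: functionName,
    InvocationType: fireAndForget ? 'Event' : 'RequestResponse',
    Payload: Buffer.from(JSON.stringify(payload)),
  };
}

// Invoke the proxy Lambda synchronously and return its parsed response.
async function invokeProxy(functionName, payload, region) {
  // Loaded lazily so buildInvokeParams can be used without the SDK installed.
  const { LambdaClient, InvokeCommand } = require('@aws-sdk/client-lambda');
  const client = new LambdaClient({ region });
  const params = buildInvokeParams(functionName, payload);
  const result = await client.send(new InvokeCommand(params));
  return JSON.parse(Buffer.from(result.Payload).toString('utf8'));
}

// Example (requires AWS credentials and the SDK):
// invokeProxy('function-name', { cmd: 'long' }, 'us-west-2').then(console.log);
```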

Long running commands

Depending on the use case, you may not be able to complete your command in the maximum Lambda run time of 15 minutes. If this is the case, you can use nohup or screen to start your command, which will allow it to continue running after the Lambda function disconnects. You can invoke the Lambda with an InvocationType of Event which will cause the Lambda to return immediately after invocation.

If you are kicking off a long running process, you'll typically want to have the command you are running fire off some kind of notice on completion or on failure. This could be to a webhook, another Lambda invocation, or some other message queue.
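As a rough illustration of the nohup approach, the helper below wraps a command so that it detaches from the SSH session, writes its output to a log file, and optionally runs a notification command when it finishes (whether it succeeded or failed). The function names, the log path, and the webhook URL are assumptions made for the sketch.

```javascript
// Quote a string so the remote POSIX shell treats it as a single word.
function shellQuote(text) {
  return "'" + String(text).replace(/'/g, "'\\''") + "'";
}

// Wrap `cmd` so it keeps running after the SSH session closes.
// stdin, stdout, and stderr are all redirected so the session is free to exit.
function detach(cmd, logFile, notifyCmd) {
  const script = notifyCmd ? `${cmd}; ${notifyCmd}` : cmd;
  return `nohup sh -c ${shellQuote(script)} > ${shellQuote(logFile)} 2>&1 < /dev/null &`;
}

console.log(detach('sleep 600', '/tmp/job.log'));
// nohup sh -c 'sleep 600' > '/tmp/job.log' 2>&1 < /dev/null &

console.log(detach('./backup.sh', '/tmp/backup.log',
  'curl -fsS -X POST https://example.com/hooks/done'));
```

The resulting string would be passed to the SSH exec call in place of the plain command.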

Security

First, if possible, you should run the EC2 instances in a private subnet (that is, a subnet which doesn't provide public DNS or IP addresses to the EC2 instances). This means that your EC2 instances will be less exposed to attackers. Note that the EC2 instances in a private subnet can access the internet (unless you lock down outgoing network access). You can also lock down SSH access so that only instances within the subnet can SSH to these servers using a security group.

Second, while the above example bundles the `my.pem` file in the zip file, this is not the most secure practice. Anyone who can access the Lambda zip file will be able to get the private key and will have the ability to SSH in to the EC2 instances (security groups and network limits notwithstanding). More secure alternatives include:

- store the pem file in an S3 location to which only the Lambda has access

- store the file contents in an environment variable

- store the contents in the SSM parameter store
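As one illustration of the environment variable option, the key could be stored base64-encoded (which sidesteps newline handling) and decoded at startup. This is only a sketch: the variable name `SSH_PRIVATE_KEY_B64` and the function `loadPrivateKey` are hypothetical, and anyone who can read the function's configuration can still read the value, so access to that configuration needs to be restricted too.

```javascript
// Decode an SSH private key from a base64-encoded environment variable.
// SSH_PRIVATE_KEY_B64 is a hypothetical name chosen for this sketch.
function loadPrivateKey(env = process.env) {
  const encoded = env.SSH_PRIVATE_KEY_B64;
  if (!encoded) {
    throw new Error('SSH_PRIVATE_KEY_B64 is not set');
  }
  const key = Buffer.from(encoded, 'base64').toString('utf8');
  // Cheap sanity check that the decoded value looks like a PEM private key.
  if (!key.includes('PRIVATE KEY')) {
    throw new Error('SSH_PRIVATE_KEY_B64 does not decode to a PEM private key');
  }
  return key;
}

// In the handler, this would replace fs.readFileSync(pemfile):
//   const ssh = new SSH({ host: host, user: user, key: loadPrivateKey() });
```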

Finally, since you can run the SSH command by running the Lambda, you'll need to lock down Lambda invocation. Here's a sample IAM policy that you can attach to a user, group or role to allow invocation of a specific Lambda function.

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Sid": "Allow<function-name>LambdaAccess",
          "Effect": "Allow",
          "Action": "lambda:InvokeFunction",
          "Resource": "arn:aws:lambda:<region>:<123456789012>:function:<function-name>"
        }
      ]
    }

One final note: please do not expose this Lambda without some kind of authentication layer (whether API Gateway, IAM or something else). If you don't have that layer, you are opening yourself up to a world of hurt^H^H^H^Hinsecurity.

Conclusion

Allowing the running of a command over SSH allows you to constrain access to an EC2 based system while leveraging the AWS security infrastructure and exposing the access via the AWS SDK or an HTTP call. This brings additional flexibility to your systems without requiring substantial changes to the underlying operating environment.

Dan Moore · Dec 18th, 2019
